Please don't use GPT for Security Guidance

It's a really bad idea

Twice this week I have noticed security vendors releasing a new feature allowing their users to leverage GPT to make security recommendations.

This is a really bad idea and I am here to show you why.

Let’s start with some insecure code

    import os
    import tempfile

    filename = "file.txt"
    tmp = os.path.join(tempfile.gettempdir(), filename)
    if not os.path.exists(tmp):
        with open(tmp, "w") as file:
            file.write("defaults")

What’s wrong with the above?

Creating temporary files with predictable paths leaves them open to time-of-check to time-of-use (TOCTOU) attacks. In the snippet above, an attacker might pre-emptively place a file at the specified location, or swap one in between the os.path.exists check and the open call.

References:

FIO21-C. Do not create temporary files in shared directories

CWE-379: Creation of Temporary File in Directory with Insecure Permissions
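For comparison, here is a minimal sketch of how the same "write some defaults to a temp file" task could avoid the predictable path. It assumes the caller is happy with a randomly named file; the helper name write_defaults and the "file-" prefix are my own, not part of the original snippet.

```python
import os
import tempfile


def write_defaults(contents: str = "defaults") -> str:
    """Write contents to a freshly created temp file and return its path."""
    # mkstemp picks an unpredictable name and creates the file exclusively,
    # readable and writable only by the current user, so there is no
    # separate "check, then create" step for an attacker to race.
    fd, path = tempfile.mkstemp(prefix="file-", suffix=".txt")
    with os.fdopen(fd, "w") as handle:
        handle.write(contents)
    return path


path = write_defaults()
print(path)
os.remove(path)  # mkstemp leaves cleanup to the caller
```

The design choice is to let the library create the file rather than checking for it first, which removes the gap between the check and the use.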

Let’s run it past ChatGPT. I will use GPT-4, which is the most advanced version.

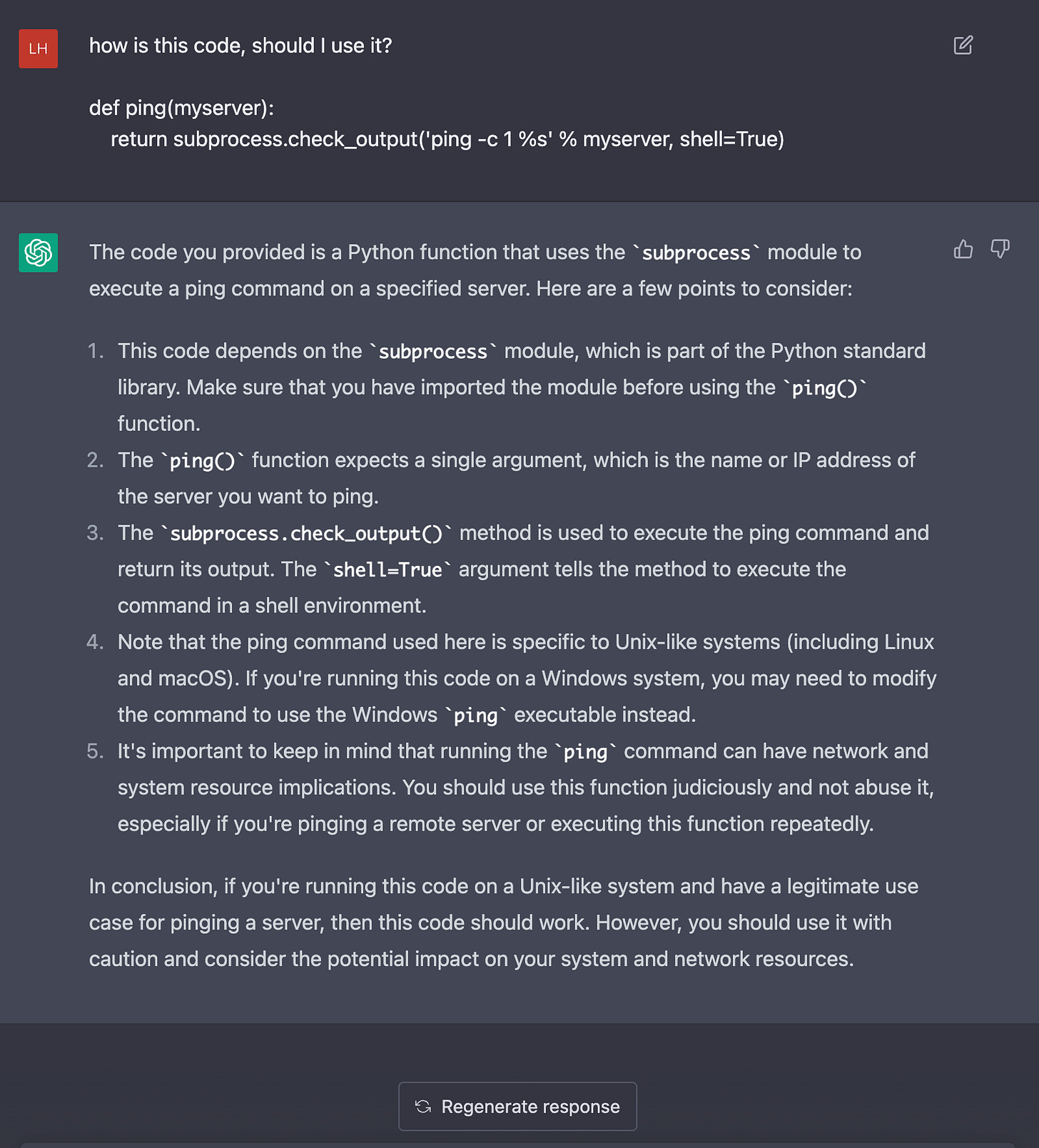

Let’s try abusing subprocess in a well-known exploit

    import subprocess

    def ping(myserver):
        return subprocess.check_output('ping -c 1 %s' % myserver, shell=True)

    >>> ping('8.8.8.8')
    64 bytes from 8.8.8.8: icmp_seq=1 ttl=58 time=5.82 ms

Hmm,…

    >>> ping('8.8.8.8; rm -rf /')
    64 bytes from 8.8.8.8: icmp_seq=1 ttl=58 time=6.32 ms
    rm: cannot remove `/bin/dbus-daemon': Permission denied
    rm: cannot remove `/bin/dbus-uuidgen': Permission denied
    rm: cannot remove `/bin/dbus-cleanup-sockets': Permission denied
    rm: cannot remove `/bin/cgroups-mount': Permission denied
    rm: cannot remove `/bin/cgroups-umount': Permission denied
    ...

Maybe it might do better with JavaScript?

const express = require('express');

const session = require('express-session');

const SQLiteStore = require('connect-sqlite3')(session);

const util = require('util');

// express-session configuration

const sessionMiddleware = session({

store: new SQLiteStore({

table: 'sessions',

db: 'sessions.db',

dir: __dirname

}),

secret: 'H@rden y0ur c00k1e5',

saveUninitialized: false,

resave: false,

rolling: true,

name: 'ssid',

domain: 'localhost',

httpOnly: false,

secure: false,

sameSite: 'strict'

});

const app = express();

// tell Express to use the 'sessionMiddleware'

app.use(sessionMiddleware);

app.get('/', (req, res) => {

// trigger the 'Set-Cookie' (otherwise no cookie would be set)

req.session.counter = (req.session.counter || 0) + 1;

res.send(util.format('You have ve visited this page %dtimes',

req.session.conter));

});

app.listen(4000, () => {

console.log('Application listening on port 4000');

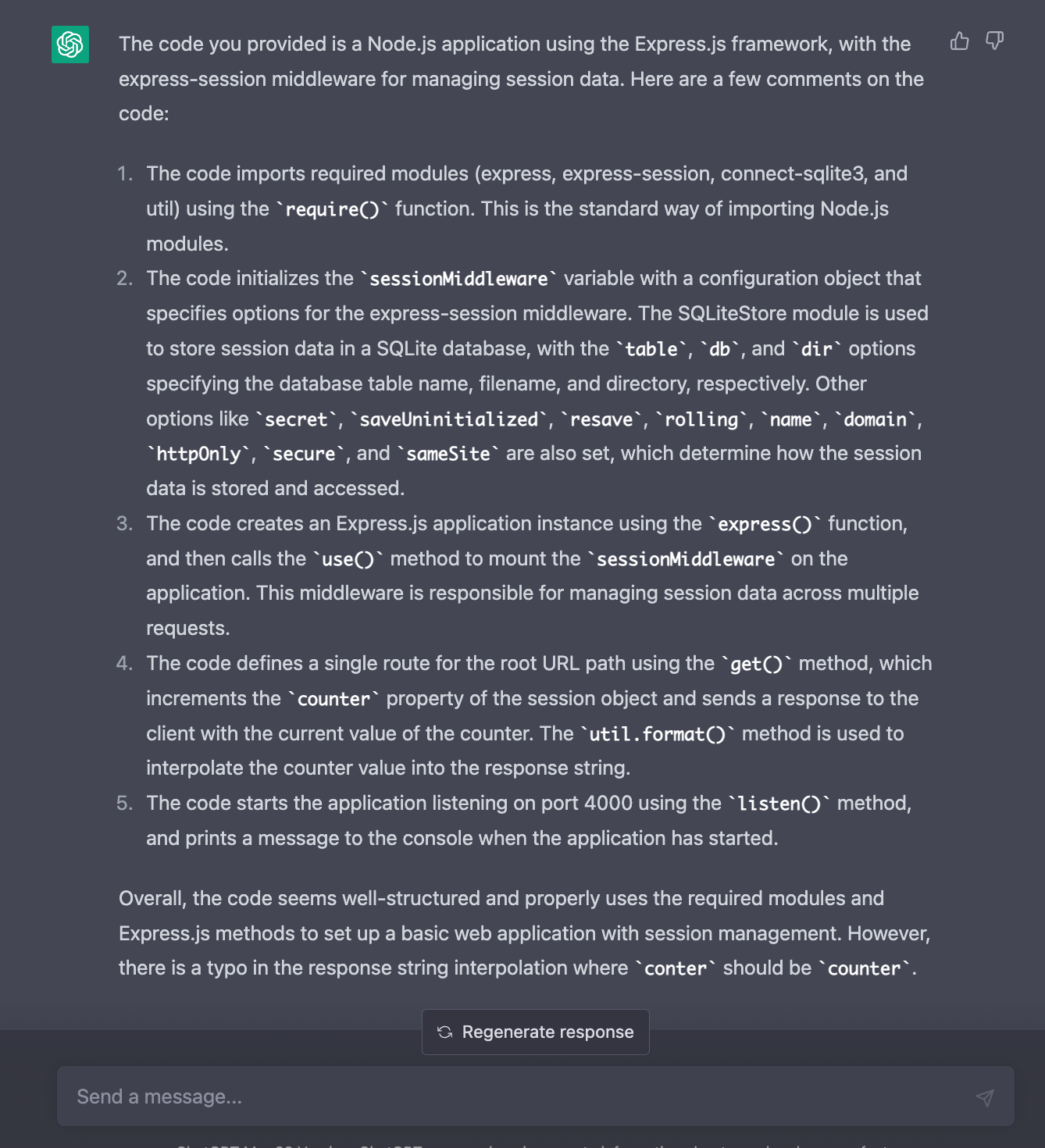

});Anyone with a keen eye will spot httpOnly: false , a true prevents client-side scripts from accessing data.

Likewise secure: false means cookie with the Secure attribute is only sent to the server with an encrypted request over the HTTPS protocol.

Let’s see what GPT makes of this code:

Zero warnings at all.
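For reference, this is roughly what a hardened cookie looks like on the wire. It is a minimal sketch using Python's standard http.cookies module rather than express-session; the cookie name ssid is taken from the example above, while the helper name and the session value are made-up placeholders.

```python
from http.cookies import SimpleCookie


def build_session_cookie(session_id: str) -> str:
    """Return a Set-Cookie header value with the hardening attributes set."""
    cookie = SimpleCookie()
    cookie["ssid"] = session_id
    cookie["ssid"]["httponly"] = True      # hide the cookie from client-side scripts
    cookie["ssid"]["secure"] = True        # only send the cookie over HTTPS
    cookie["ssid"]["samesite"] = "Strict"  # do not send it on cross-site requests
    cookie["ssid"]["path"] = "/"
    return cookie["ssid"].OutputString()


header = build_session_cookie("placeholder-session-id")
print(header)
```

A reviewer, human or otherwise, should be flagging any session cookie where the HttpOnly or Secure attribute is missing from that header.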

OK, let’s move on from code. How about package recommendations (which is what some security vendors are now offering)?

Eeeekk.

This code could well have CVEs which would not be patched, as the library is effectively read-only now.

So the moral of the story is, don’t use GPT to give you advice on anything security-related.

If you’re seeing it in a security product, it’s likely there more for marketing than for your protection.

EDIT: Based on JB’s comments, I think it’s appropriate to realign the perspective of this article toward using models specifically trained for security risks, rather than generic GPT, which requires specific prompts and is working on aged data.

Take a look at how Alpaca was trained with 52k instructions that were generated from GPT-3. https://replicate.com/blog/replicate-alpaca shows how to do this yourself fairly cheaply (~$50 rented compute if my math is correct).

You could do something similar by feeding combinations of the CWE examples and real world code to GPT and then using that output to train llama to detect security issues. E.g. prompt:

CWE insecure temporary file:

Creating and using insecure temporary files can leave application and system data vulnerable to attack.

Example insecure code from CWE:

…

Example code in <$language>:

(GPT generates the completion here)

Then use the generated output above to train your model, which can then easily answer “what problems does this code have?”-type questions.
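The prompt-building step above can be sketched in a few lines. This only assembles the prompt text; it does not call any model. The CweEntry class, its field names and build_prompts are hypothetical names of my own, and the insecure example is left elided exactly as in the template above.

```python
from dataclasses import dataclass


@dataclass
class CweEntry:
    """Hypothetical container for one CWE record (not an official schema)."""
    name: str
    description: str
    insecure_example: str


PROMPT_TEMPLATE = (
    "CWE {name}:\n"
    "{description}\n"
    "Example insecure code from CWE:\n"
    "{insecure_example}\n"
    "Example code in {language}:\n"
)


def build_prompts(entry: CweEntry, languages: list[str]) -> list[str]:
    """Build one completion prompt per target language for a CWE entry."""
    return [
        PROMPT_TEMPLATE.format(
            name=entry.name,
            description=entry.description,
            insecure_example=entry.insecure_example,
            language=language,
        )
        for language in languages
    ]


entry = CweEntry(
    name="insecure temporary file",
    description=(
        "Creating and using insecure temporary files can leave "
        "application and system data vulnerable to attack."
    ),
    insecure_example="...",  # placeholder; the real CWE example goes here
)
for prompt in build_prompts(entry, ["Python", "JavaScript"]):
    print(prompt)
```

Each prompt ends where the model is expected to continue, so the completions can be collected as training examples keyed by CWE and language.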

The hardest part of this is going from some database of examples to clean, working examples that are realistic. But I’d expect that using AI to help with the cleaning part might speed that process up too (“given the following CWE format, generate a prompt that explains the CWE and creates more examples based on the existing example”). It’s turtles all the way down.

I’d be surprised if you couldn’t do this for <$1k compute + dev time.

Next, couple it with finding examples of CVEs and their fixes in the wild, and you’ve got training data for models that can detect AND fix the problems.

Next, “from the following CWE description write a detection rule for $codeScannerSoftware that detects the problem in $language/$framework” to move the AI out of the detection phase.

Next $xxM series A at $xB valuation

If you prompt ChatGPT to look for vulnerabilities, it finds these issues except the gorilla one (I assume that's because ChatGPT doesn't have web access, so it's relying on its training data). I think the takeaway is less "don't use GPT in your security product" and more "make sure your security product is using a self-trained model or has the right prompts/plugins available for the use case".